robot read me

reading release notes so you don't have to

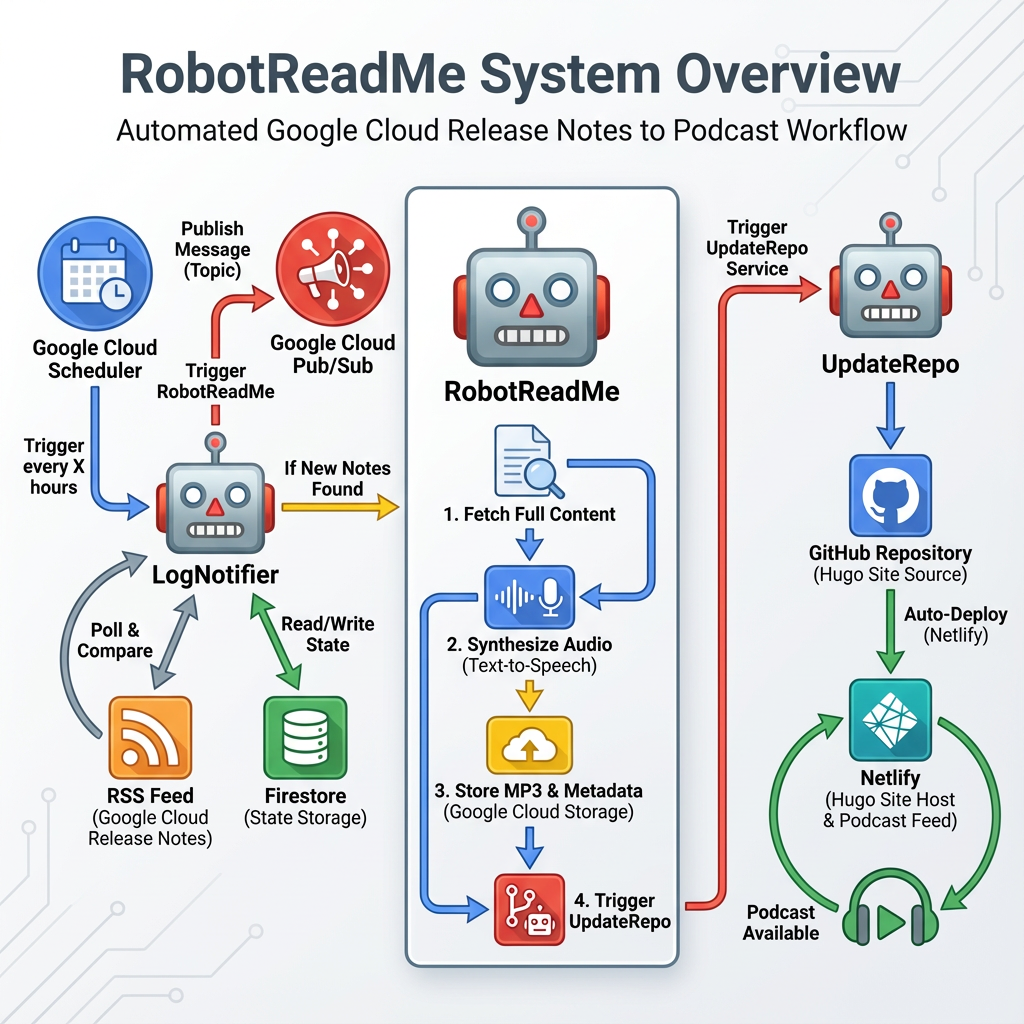

We are excited to share a high-level look at the engine that powers RobotReadMe.

RobotReadMe is an event-driven, serverless architecture designed to autonomously monitor data sources, synthesize multi-media content, and execute multi-channel deployments. It leverages the scalability of Google Cloud Platform to turn raw RSS feeds into polished audio articles and published web content without human intervention.

Architecture Overview

The automated workflow is broken down into three distinct phases:

1. The Trigger

Detection & Signal Initiation

The process begins on a schedule. A Cloud Scheduler cron job acts as the system’s heartbeat, periodically waking the lognotifier Cloud Run service. This watchdog polls external RSS feeds (e.g., GCP Release Notes) and compares them with the previous state stored in Firestore. If new entries are detected, it immediately publishes a “go” signal to a Pub/Sub topic, which kicks off the parallel processing stage.
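
As a rough illustration of the comparison step (not the actual lognotifier code), the sketch below filters a feed down to the items that have not been seen before. The `Entry` type, the `newEntries` function, and the in-memory `seen` map are hypothetical stand-ins; in the real service the previous state lives in Firestore and each new item would lead to a Pub/Sub publish.

```go
package main

import "fmt"

// Entry is a hypothetical, simplified view of one RSS item.
type Entry struct {
	ID    string // assumed to be a stable identifier such as the item's GUID or link
	Title string
}

// newEntries returns the feed items whose IDs are not in seen, preserving
// feed order. The seen map stands in for the state stored in Firestore.
func newEntries(seen map[string]bool, feed []Entry) []Entry {
	var fresh []Entry
	for _, e := range feed {
		if !seen[e.ID] {
			fresh = append(fresh, e)
		}
	}
	return fresh
}

func main() {
	seen := map[string]bool{"a": true}
	feed := []Entry{{ID: "a", Title: "Old"}, {ID: "b", Title: "New"}}
	for _, e := range newEntries(seen, feed) {
		// The real service would publish a Pub/Sub message here.
		fmt.Println("publish:", e.ID, e.Title)
	}
}
```

Keeping the comparison a pure function makes it easy to test without any cloud dependencies.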

2. The Forge

Content Synthesis & Asset Generation

The Pub/Sub message fans out to trigger the core worker services. The primary generator, the robotreadme Cloud Run instance, ingests the raw feed content. It pipes the text through Google’s neural Text-to-Speech API to generate lifelike audio. The resulting assets are meticulously organized: generated MP3s are staged in a dedicated GCS Bucket, while synthesized Markdown (.md) metadata is finalized in a separate entry bucket. Throughout this forging process, execution state is checkpointed in near real-time via Firestore.

3. The Delivery

Multi-Channel Deployment & Broadcast

The final phase ensures maximum visibility through a dual-path deployment.

- The Broadcast: Simultaneously with the forge phase, the `announce` microservice receives the Pub/Sub trigger and immediately posts an update to external social platforms like Twitter/X.

- The Publish: An Eventarc trigger watches for object finalization in the entries GCS bucket. Upon detection, it invokes the CI/CD pipeline: Cloud Build commits the new content to GitHub, which in turn calls a webhook to force an immediate Netlify (Hugo static site) rebuild. The result is zero-touch publishing.
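
To make the publish path a little more concrete, here is a hedged sketch of an HTTP receiver for the storage event. Eventarc delivers Cloud Storage events as CloudEvents, with the event type in the `ce-type` header and the object's `bucket` and `name` in the JSON body. The handler itself, the `shouldPublish` helper, and the `.md` filter are hypothetical, and the sketch only reports what it would publish instead of starting a build.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// finalizedType is the CloudEvents type for a newly finalized GCS object.
const finalizedType = "google.cloud.storage.object.v1.finalized"

// storageObject holds the two payload fields this sketch needs.
type storageObject struct {
	Bucket string `json:"bucket"`
	Name   string `json:"name"`
}

// shouldPublish reports whether an event is a finalized Markdown object.
// Filtering on the ".md" suffix is an assumption for illustration.
func shouldPublish(eventType string, obj storageObject) bool {
	return eventType == finalizedType && strings.HasSuffix(obj.Name, ".md")
}

// handleEvent decodes the event payload and decides whether to publish.
func handleEvent(w http.ResponseWriter, r *http.Request) {
	var obj storageObject
	if err := json.NewDecoder(r.Body).Decode(&obj); err != nil {
		http.Error(w, "bad event payload", http.StatusBadRequest)
		return
	}
	if !shouldPublish(r.Header.Get("ce-type"), obj) {
		w.WriteHeader(http.StatusNoContent)
		return
	}
	// A real handler would kick off the build-and-commit step here.
	fmt.Fprintf(w, "publish gs://%s/%s\n", obj.Bucket, obj.Name)
}

func main() {
	// Simulate one event delivery locally with httptest.
	body := strings.NewReader(`{"bucket":"entries","name":"2025-01-01-notes.md"}`)
	req := httptest.NewRequest(http.MethodPost, "/", body)
	req.Header.Set("ce-type", finalizedType)
	rec := httptest.NewRecorder()
	handleEvent(rec, req)
	fmt.Print(rec.Body.String())
}
```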

Latest Updates

In addition to our architectural refinements, we’ve recently completed several major milestones to enhance the engine’s reliability and observability:

- Go 1.25 Upgrade: Standardized the entire codebase on the latest Go 1.25 release for improved performance and security.

- Resilience & Error Handling: Implemented robust retry logic for all GCP service interactions (GCS, TTS, Firestore) and replaced legacy exits with structured error handling.

- Observability: Standardized structured JSON logging (`log/slog`) across all modules, ensuring consistent and searchable telemetry in Cloud Logging.

- Configuration & Validation: Implemented a unified configuration management system using `godotenv` with “fail-fast” startup validation.

- Stability & Cleanup: Added graceful shutdown listeners for Cloud Run services and implemented automatic temporary file cleanup.

- Testing Excellence: Created a comprehensive integration testing suite using GCP fakes and expanded coverage for complex edge cases.

Stay tuned for more updates as we continue to automate the world of release notes!